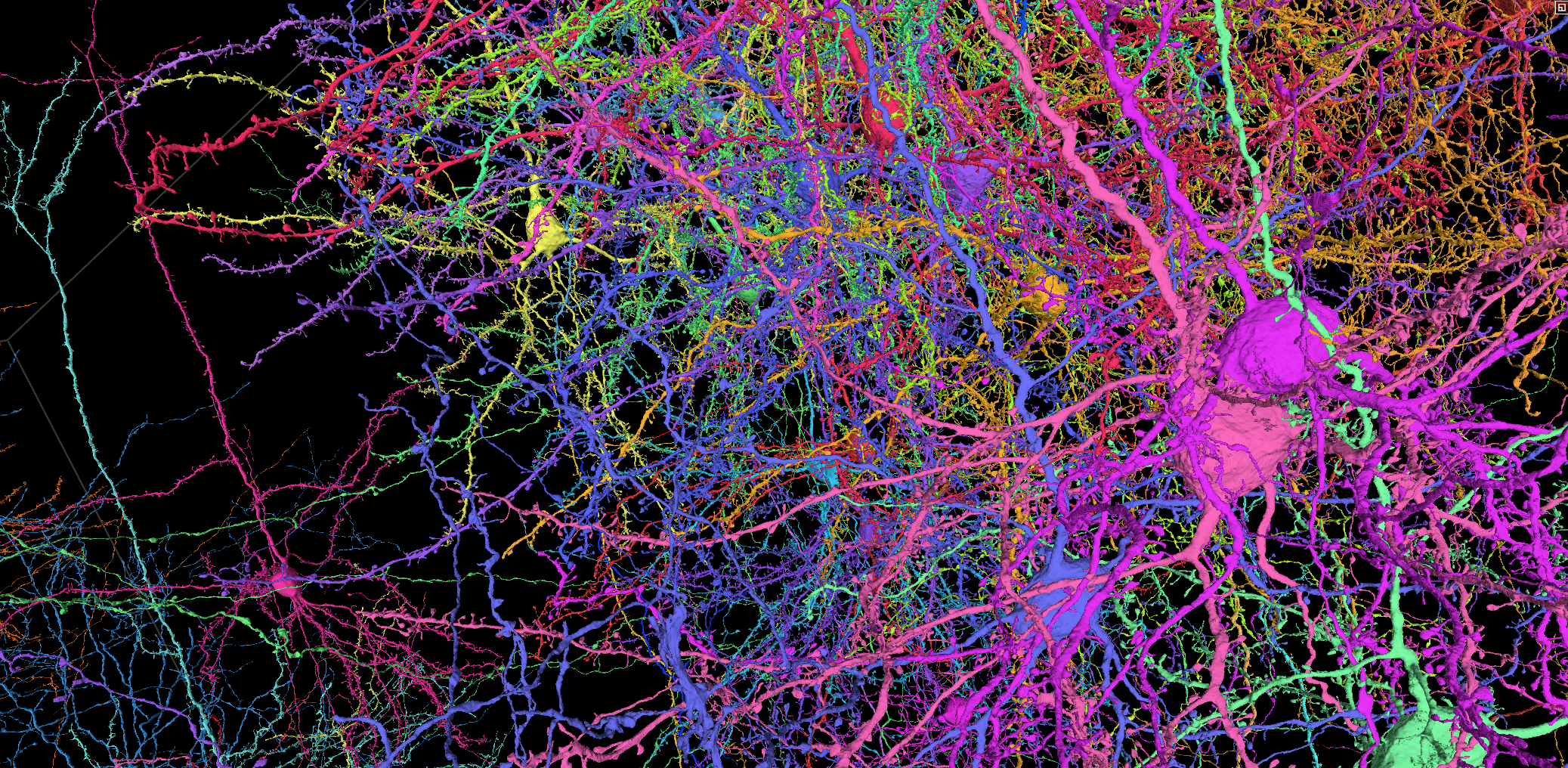

A new deep learning tool from Keith Hengen allows scientists to give neurons a “computational fingerprint,” work that could significantly advance our understanding of the building blocks of thought.

The 86 billion neurons of the human brain are involved in constant banter, sending and receiving codes written in strings of electrical impulses. Listening to these conversations can offer insight into the basis of behavior and thought, but the language of neurons — which have hundreds of subtypes — has been hard to crack. A key question is whether diverse neurons, like people, speak different languages.

The language — or languages — used by brain cells may remain untranslated, but that hasn’t stopped Keith Hengen, assistant professor of biology, from listening. A new study published in Cell Reports details how Hengen and Eva Dyer of the Georgia Institute of Technology created a new deep learning tool to help decode the chaos of cognition.

The tool, which goes by the whimsical name LOLCAT (short for LOcal Latent Concatenated ATtention), allows scientists to identify neuron types simply by looking at the timing of their activity, a sort of “computational fingerprint.” This opens the possibility that any researchers recording the activity of neurons could identify underlying cell types without additional tools — a major advance in understanding the building blocks of thought.

The results of the LOLCAT study show unexpected patterns and structure in the flow of information through neural networks. “You can actually learn the vocabulary of one cell type and that vocabulary is preserved in other cells of the same type, and is constant in different cognitive environments,” Hengen said.

Hengen and his team, including graduate student Aidan Schneider, research technician Sahara Ensley, and Georgia Tech graduate student Mehdi Azabou, unleashed LOLCAT on two types of mouse brain recordings and on a computer model complete with 230,000 “neurons” representing 16 different cell types.

Hengen notes that LOLCAT was made possible by a massive database of recordings of activity in the mouse visual cortex, collected by the Allen Institute, an independent research institution based in Seattle. “The Allen has created an incredible set of resources, from datasets to tools, and everything they do is completely publicly available,” Hengen said. “We’re extremely grateful for all of their work.”

For both the real mice and the computer model, LOLCAT could identify the cell types with speed and accuracy that exceed those of any other current method. Hengen says LOLCAT will inevitably become more accurate and efficient over time as it trains on increasingly detailed datasets.

Neural information encoding is typically measured on the scale of milliseconds. But LOLCAT considered time scales ranging from milliseconds up to as long as 30 minutes, a design breakthrough that revealed unexpected results. LOLCAT’s ability to decode neuron types actually improved over longer readings. This suggests that the brain can take advantage of multiple timescales to simultaneously carry distinct pieces of information without interference, Hengen said. Potentially, he explained, brain cells could essentially do the same thing that LOLCAT does and identify other cells simply by tracking their firing patterns.
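For readers who want a concrete picture of what "looking at timing across time scales" might involve, here is a minimal sketch. It is not LOLCAT itself, whose architecture is not described in this article. It is a much simpler, hypothetical summary: a histogram of the gaps between a neuron's spikes, using bins spaced logarithmically from 1 millisecond to 30 minutes. The function name, the bin count, and the synthetic spike train are all assumptions made for illustration.

```python
import math


def log_isi_histogram(spike_times, n_bins=12, min_isi=1e-3, max_isi=1800.0):
    """Summarize spike timing as a normalized inter-spike-interval histogram.

    Hypothetical illustration only, not the method used in the study.
    spike_times: sorted spike times in seconds.
    Bins are log-spaced from min_isi (1 ms) to max_isi (30 minutes);
    intervals outside that range are counted in the nearest edge bin.
    """
    # Gaps between consecutive spikes
    intervals = [later - earlier
                 for earlier, later in zip(spike_times, spike_times[1:])]

    log_min = math.log10(min_isi)
    log_max = math.log10(max_isi)
    bin_width = (log_max - log_min) / n_bins

    counts = [0] * n_bins
    for isi in intervals:
        if isi <= 0:
            continue  # skip duplicate or out-of-order timestamps
        index = int((math.log10(isi) - log_min) / bin_width)
        index = min(max(index, 0), n_bins - 1)  # clip into the edge bins
        counts[index] += 1

    total = sum(counts)
    # Return fractions so trains of different lengths are comparable
    return [count / total for count in counts] if total else counts


# Synthetic example: a perfectly regular neuron firing every 10 ms
regular_train = [i * 0.01 for i in range(1000)]
fingerprint = log_isi_histogram(regular_train)
```

A regular neuron like this one puts all of its weight in a single bin, while a bursty or slowly drifting neuron would spread weight across short and long bins. A real classifier would need far richer features than this, but the sketch shows why longer recordings can help: the slow end of the histogram only fills in when there is enough data to observe long gaps.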

Hengen — along with Ralf Wessel, professor of physics, and Likai Chen, assistant professor of mathematics and physics — is one of the lead researchers involved in Toward a Synergy Between Artificial Intelligence and Neuroscience, one of nine research clusters funded by the Incubator for Transdisciplinary Futures.

The cluster is pursuing projects that combine the insights of computer science and neuroscience to better understand both realms. Researchers will advance their understanding of the brain using deep learning tools like LOLCAT, and AI will improve by emulating the networks of brains, still the most powerful computational devices on Earth. “Perhaps we can take some of the solutions that nature has stumbled onto and use those in the application of deep learning,” Hengen said.